Get ready to unlock the full potential of your AI models with enhanced context, security, governance, data quality, and business SLOs through the power of the data product layer.

The Surge in AI Adoption

According to McKinsey, 65% of organizations currently use Gen AI, while 83% of companies claim that AI is a top priority in their business plans. The growing importance of Large Language Models (LLMs) and other GenAI models across industries has highlighted their potential to transform day-to-day tasks, including programming, natural language processing in customer-facing applications, and data analysis. Organizations are increasingly integrating AI into core business functions, driven by the potential for substantial competitive advantages.

This surge in adoption is particularly evident among AI performers, who utilize AI not only for efficiency and cost reduction but also to innovate and create new revenue streams. These leading organizations are setting the pace by embedding AI deeply within their operations, investing heavily in AI capabilities, and employing advanced practices to scale AI effectively. As AI continues to evolve, its adoption is expected to accelerate further, reshaping industries and driving transformative changes in business strategies and operations.

How LLMs are Helping Businesses Scale

LLMs are versatile tools capable of handling a wide range of tasks due to their training on vast datasets and ability to understand and generate human-like text. Here are some areas where LLMs excel:

- Natural Language Processing: Engaging in conversations, text completion, and content creation like articles and stories.

- Language Translation and Summarization: Translating text between languages and condensing long texts into shorter summaries.

- Question Answering and Sentiment Analysis: Providing accurate answers to questions and analyzing emotional tones in text.

- Content Personalization and Creative Writing: Generating personalized recommendations and creating imaginative stories and poems.

- Educational and Programming Assistance: Explaining complex topics, helping with homework, generating code snippets, and debugging.

- Data Analysis: Interpreting data and generating insights for decision-making.

These capabilities make LLMs valuable across various industries, including technology, education, healthcare, entertainment, and more.

According to a VentureBeat analysis, despite all the use cases that AI and ML can offer industries, 87% of AI projects never go into production. That's shocking! Ensuring the accuracy and reliability of LLMs remains the most significant challenge: the models are complex, they process vast amounts of unstructured data, and they often lack domain-specific context and produce biased responses. Let's understand this in detail.

Navigating the Challenges of Working with LLMs

Numerous public and internal LLMs have been criticized for their inconsistent performance. Enterprises building their own LLMs encounter substantial challenges, such as a lack of domain-specific context and biased responses. Despite these issues, organizations continue to invest in LLMs to revolutionize customer interactions, optimize operations, and enable innovative business models for enhanced growth.

Let's first understand how LLMs function and why these issues occur so frequently.

LLMs are trained on vast amounts of unstructured data. During training, they encode what they learn in the weights and biases of their neural network, which capture both an understanding of language and the general knowledge present in the training data.

Fundamental 1: LLMs work from the knowledge they were trained on, so a question outside that knowledge may receive an inaccurate response. When we use LLMs for enterprise use cases, we must keep in mind that they may not fully understand domain-specific questions and are likely to hallucinate.

So, we need to give them the domain knowledge required to answer our specific questions. That's why RAG (Retrieval-Augmented Generation), which retrieves relevant information at query time and adds it to the prompt, is becoming the most popular way of providing domain knowledge to LLMs. But vanilla RAG can only go so far.
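To make the retrieval step concrete, here is a minimal sketch of vanilla RAG in Python. It is an illustration under stated assumptions, not any product's implementation: the sample documents, the word-overlap scoring, and the function names `retrieve` and `build_prompt` are all hypothetical, and a real system would typically use embedding-based search instead of word overlap.

```python
import re

# Hypothetical domain snippets; in practice these would come from enterprise sources.
DOCUMENTS = [
    "Net revenue is gross revenue minus refunds and discounts.",
    "An active customer has placed at least one order in the last 90 days.",
    "Churn rate is the share of customers lost during a billing period.",
]

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, top_k=1):
    """Rank documents by word overlap with the question (a stand-in for vector search)."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(question, documents):
    """Prepend the retrieved context to the question before it is sent to an LLM."""
    context = "\n".join(retrieve(question, documents))
    return f"Context:\n{context}\n\nQuestion: {question}"

prompt = build_prompt("How do we define an active customer?", DOCUMENTS)
```

The resulting `prompt` carries the definition of an active customer alongside the question, so the model no longer has to guess at the business meaning. The quality of the answer still depends on what is retrieved, which is where vanilla RAG starts to fall short.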

Fundamental 2: LLMs operate primarily on the principle of "Garbage in, Garbage out." The higher the quality of the data a model is trained on, the better its results. Unfortunately, off-the-shelf LLMs are not trained on the structured, relevant data that enterprise use cases require. However, when given high-quality structured data as part of the context, an LLM can do a great job.

The most popular enterprise use case is querying an extensive set of tables and data lakes using LLMs. Here are two broad ways in which LLMs are being used in enterprises today:

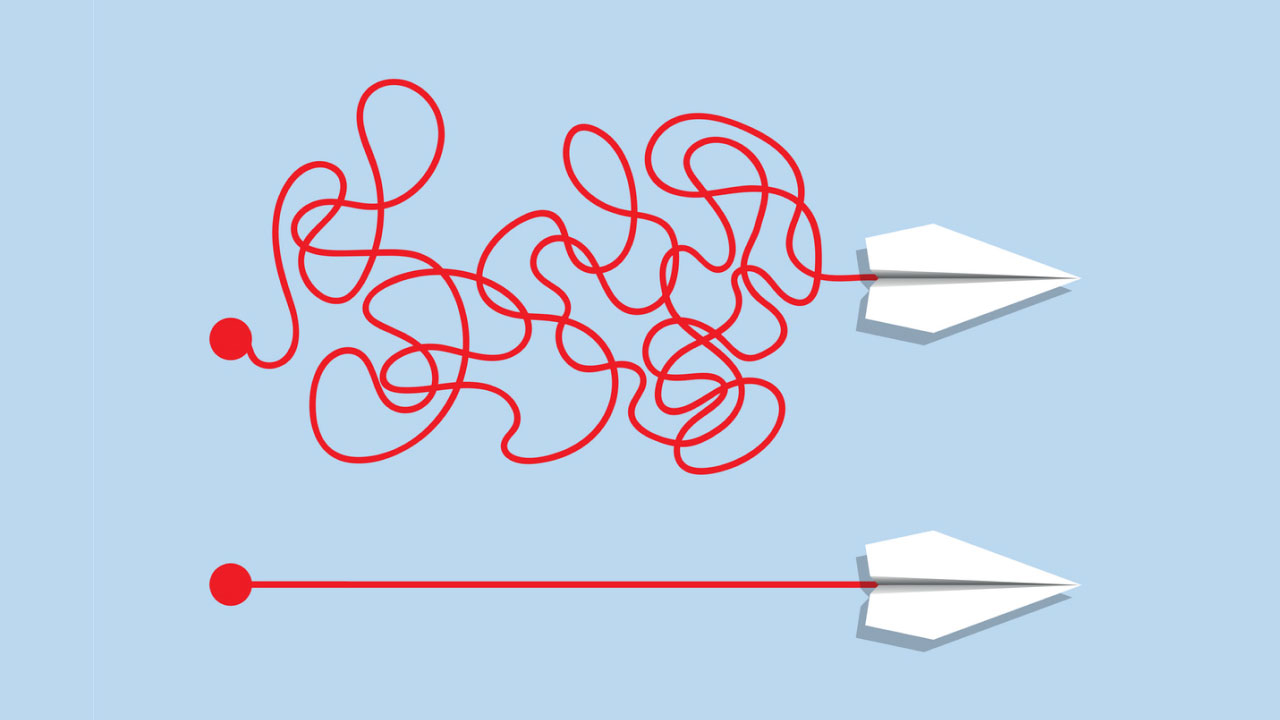

Scenario 1: Unorganized Data Pools

Organizations often give LLMs access to unorganized data sources, assuming AI will seamlessly process this data and provide accurate responses. However, this assumption is flawed. LLMs struggle to generate precise and optimized queries without a structured data framework, leading to inefficient SQL queries, poor performance, and increased computational costs. Simply providing a database schema is insufficient; LLMs need detailed contextual information to generate accurate SQL queries. Without understanding the metrics, measures, dimensions, entities, and their relationships, the queries produced by LLMs are often inefficient and inaccurate.
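The difference between a bare schema and a schema with context can be sketched in a few lines of Python. The table, column names, and descriptions below are invented for illustration, and `describe` is a hypothetical helper that renders the text an LLM would receive in its prompt.

```python
# Bare schema: column names alone say little about business meaning.
SCHEMA = {"orders": ["id", "cust", "amt", "ts"]}

# Hypothetical semantic context for the same table (all names are illustrative).
CONTEXT = {
    "orders": {
        "description": "One row per customer order.",
        "columns": {
            "id": "Unique order identifier.",
            "cust": "Foreign key to customers.id.",
            "amt": "Order total in USD; the measure behind total revenue.",
            "ts": "Order creation time (UTC); used as the time dimension.",
        },
    }
}

def describe(schema, context=None):
    """Render tables as prompt text, adding descriptions when context is supplied."""
    lines = []
    for table, columns in schema.items():
        table_ctx = (context or {}).get(table, {})
        lines.append(f"Table {table}: {table_ctx.get('description', '')}".rstrip())
        for column in columns:
            note = table_ctx.get("columns", {}).get(column)
            lines.append(f"  - {column}: {note}" if note else f"  - {column}")
    return "\n".join(lines)

schema_only = describe(SCHEMA)
with_context = describe(SCHEMA, CONTEXT)
```

With `schema_only`, the model sees only `cust`, `amt`, and `ts` and has to guess what they mean. With `with_context`, it is told which column is the revenue measure, which is the time dimension, and how the table relates to customers.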

Scenario 2: Organized Data Catalogues

Organizations might organize their data into proper catalogs with defined schemas and entities before feeding it to LLMs. This structured approach allows LLMs to better understand the source and nature of the data they are querying. While this method improves accuracy and efficiency, it comes with its own set of challenges: the catalog needs continuous updates, maintaining it requires a lot of data movement, and the upfront costs of organizing and cataloging large datasets are substantial in terms of time and resources. Even with structured data, LLMs still have difficulty comprehending the full context and semantics of the data, leading to potential inaccuracies.

Understanding The Root Cause of the LLM Problems

Large Language Models (LLMs) face several challenges that can lead to perceived failures in their performance. Here are some of the main factors contributing to the limitations of AI projects in production:

- Lack of Contextual Understanding: LLMs can struggle with maintaining context as they do not inherently understand the semantics of user queries or the specific business domain. This lack of deeper comprehension can result in incorrect or irrelevant SQL queries. For instance, different teams might use different jargon or naming conventions for a table or column, such as customer names represented as cust, customer_name, or C-Name. An LLM might struggle to make these connections without additional context. This gap between raw data and meaningful interpretation can lead to misunderstandings and inaccuracies in AI-driven insights and decisions.

- Poor Data Quality: The effectiveness of an LLM depends heavily on the quality of the data on which it was trained. The model's outputs can be flawed if the training data is inaccurate, incomplete, or noisy. For instance, if the LLM was trained with inconsistent or outdated information about database schemas or column names, it might generate SQL queries that do not match the current database structure. This can result in errors or missing information when users request data, as the LLM may not generate queries that align with the actual database setup.

- Privacy Issues: LLMs can inadvertently expose sensitive information in their training data, potentially compromising privacy and security. For instance, if a user queries, "Retrieve customer details with recent transactions," and the LLM has been trained on sensitive customer data, there is a risk that confidential information could be revealed unintentionally. This could happen if the model generates SQL queries that access sensitive data without appropriate safeguards, raising concerns about privacy and protection.

- Technical Debt and Infrastructure: The deployment of LLMs can be hindered by compatibility issues with existing legacy systems, insufficient computational resources, or inadequate infrastructure. For instance, integrating an LLM-based SQL generator into a traditional database system might be challenging if the system relies on outdated technologies or lacks the computational power to handle real-time query generation. This can impede the effective implementation and scaling of AI solutions, leading to operational inefficiencies and limitations.

These factors collectively contribute to the difficulties encountered by LLMs, resulting in perceived failures or limitations in their performance and reliability. But how do we bring all these pieces together effectively?

The Solution: Building LLMs Powered by Data Products

Enter the data product era! A data product is a comprehensive solution that integrates Data, Metadata (including semantics), Code (transformations, workflows, policies, and SLAs), and Infrastructure (storage and compute). It is specifically designed to address various data and analytics (D&A) and AI scenarios, such as data sharing, LLM training, data monetization, analytics, and application integration. Across various industries, organizations are increasingly turning to sophisticated data products to transform raw data into actionable insights, enhancing decision-making and operational efficiency. Data products are not merely a solution; they are transformative tools that prepare and present data effectively for AI consumption—trusted by its users, up-to-date, and governed appropriately.

DataOS, the world's first comprehensive data product platform, enables the creation of decentralized data products that deliver highly contextual data, enhancing the performance of LLMs such as Llama or models from Databricks or OpenAI. DataOS takes your data strategy to the next level by serving just the slice of data required to power your LLMs. The Data Product Layer sits between your raw data layer and consumption layer and performs the critical tasks needed to improve the accuracy and reliability of your LLMs. These core tasks include enriching metadata to make data more contextual, creating semantic data models without moving any data, and applying governance policies, data quality rules, and SLOs. On top of this, it also provides an infrastructure layer to run these data products autonomously, without any dependency on your DevOps or development team.

Now let's look in detail at how the DataOS Data Product Layer can address the LLM problems we just discussed:

Providing Rich Contextual Information

DataOS provides over 300 pre-built connectors to integrate with your raw data layer, covering various data sources, including data lakes, databases, streaming applications, SaaS applications, and CSVs. Once all relevant data sources are connected, their metadata is scanned. Metadata enrichment then becomes crucial: it allows users to add descriptions, tags, and glossary terms for each attribute, making unstructured source data meaningful. This ensures that duplicate data stored across different systems under different names is accurately understood and used with proper context.

DataOS Glossary serves as a common reference point for all stakeholders, ensuring everyone understands key terms and metrics consistently. By providing descriptions, synonyms, related terms, references, and tags for each business term, the Glossary helps maintain data consistency. While AI models can generate synonyms, their accuracy is often low. Synonyms defined under the Glossary are extremely helpful for models to match different tables or columns stored across various sources accurately. Tags enrich metadata by adding descriptive information, enhancing searchability, context, and data discovery, thereby supporting data governance and user collaboration.
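As a rough illustration of how glossary synonyms help match columns across sources, the sketch below resolves physical column names to one business term. It is not the DataOS Glossary API: the `GLOSSARY` structure and the `normalize` and `resolve` helpers are hypothetical, and the synonyms are the ones used earlier in this article (cust, customer_name, C-Name).

```python
import re

# Hypothetical glossary: each business term lists the synonyms seen across sources.
GLOSSARY = {
    "customer_name": ["cust", "customer_name", "C-Name"],
}

def normalize(name):
    """Lowercase a column name and drop non-alphanumeric characters."""
    return re.sub(r"[^a-z0-9]", "", name.lower())

# Reverse index from normalized synonym to the canonical business term.
SYNONYM_INDEX = {
    normalize(synonym): term
    for term, synonyms in GLOSSARY.items()
    for synonym in synonyms
}

def resolve(column):
    """Return the canonical term for a physical column name, or None if unknown."""
    return SYNONYM_INDEX.get(normalize(column))
```

Here `resolve("C-Name")`, `resolve("CUST")`, and `resolve("customer_name")` all return `"customer_name"`, while an unlisted column such as `resolve("order_id")` returns `None`. Because the mapping is curated by people rather than guessed by the model, the match is deterministic.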

Elevating Data Quality

We can run data profiling workflows to check the incoming data's completeness, uniqueness, and correctness beforehand so that we can easily avoid using bad data for analytics or training LLMs.
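A profiling check of this kind can be sketched in plain Python. The sample rows, thresholds, and function names are assumptions made for illustration. Only completeness and uniqueness are covered here, because correctness rules depend on the domain.

```python
# Hypothetical incoming records; None marks a missing value.
ROWS = [
    {"customer_id": 1, "email": "a@example.com"},
    {"customer_id": 2, "email": None},
    {"customer_id": 2, "email": "b@example.com"},
    {"customer_id": 3, "email": "c@example.com"},
]

def profile(rows, column):
    """Compute completeness and uniqueness for one column."""
    values = [row.get(column) for row in rows]
    present = [value for value in values if value is not None]
    # Completeness: share of rows with a value. Uniqueness: share of distinct values.
    completeness = len(present) / len(values) if values else 0.0
    uniqueness = len(set(present)) / len(present) if present else 0.0
    return {"completeness": completeness, "uniqueness": uniqueness}

def passes(rows, column, min_completeness=1.0, min_uniqueness=1.0):
    """Return True only if the column meets both thresholds."""
    stats = profile(rows, column)
    return (
        stats["completeness"] >= min_completeness
        and stats["uniqueness"] >= min_uniqueness
    )
```

For the sample rows, `customer_id` is fully populated but has a duplicate, so its uniqueness is 0.75 and `passes(ROWS, "customer_id")` is `False`. A gate like this lets a pipeline stop bad data before it reaches analytics or an LLM.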

We can build a logical model once we have all the enriched metadata. This logical model focuses on entities, attributes, and their relationships without considering physical storage details, ensuring data integrity and reducing redundancy.

DataOS simplifies the creation of logical models specific to a data product by defining relationships between entities and including measures and dimensions for each. This process requires no physical data movement, as it deals solely with enriched metadata. By perfectly mapping the logical model to physical data, DataOS ensures accurate and complete data, significantly enhancing data quality. Transforming unstructured data into a structured format enables faster query responses and facilitates exploratory data analysis.

When a user queries the LLM, it uses the semantic model to reach all the required data, as the data is now well-structured with proper semantics and context. This results in clean, concise queries and avoids complex JOINs and suboptimal SQL.
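One way to picture this is a logical model that maps business terms to physical expressions, so a request phrased in measures and dimensions compiles to SQL. The following is a sketch under assumptions, not DataOS's modeling syntax: the `MODEL` dictionary, the table and column names, and `compile_query` are all hypothetical.

```python
# Hypothetical logical model: entity, dimensions, and measures mapped to physical names.
MODEL = {
    "entity": "orders",
    "table": "sales.orders_v2",
    "dimensions": {"region": "region_cd", "order_date": "CAST(ts AS DATE)"},
    "measures": {"total_revenue": "SUM(amt)", "order_count": "COUNT(*)"},
}

def compile_query(model, measures, dimensions):
    """Translate business terms into a SQL string using the logical-to-physical mapping."""
    unknown = [m for m in measures if m not in model["measures"]]
    unknown += [d for d in dimensions if d not in model["dimensions"]]
    if unknown:
        raise KeyError(f"Not defined in the model: {unknown}")
    select = [f"{model['dimensions'][d]} AS {d}" for d in dimensions]
    select += [f"{model['measures'][m]} AS {m}" for m in measures]
    sql = f"SELECT {', '.join(select)} FROM {model['table']}"
    if dimensions:
        # Group by the physical expressions behind the requested dimensions.
        group_by = ", ".join(model["dimensions"][d] for d in dimensions)
        sql += f" GROUP BY {group_by}"
    return sql

sql = compile_query(MODEL, measures=["total_revenue"], dimensions=["region"])
```

In this setup the LLM only has to pick `total_revenue` and `region`. The model supplies the physical table, the aggregation, and the grouping, so the generated SQL stays short and consistent.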

Enhancing Security and Governance

The DataOS data product platform addresses privacy issues by incorporating data governance and security policies that prevent the accidental exposure of sensitive information. By employing robust access and data policies (masking and filtering), DataOS ensures that access to sensitive data is tightly controlled and monitored. With these policies in place, only authorized personnel can access sensitive PII. This prevents the LLM from generating SQL queries that expose confidential data without appropriate safeguards.
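To show what masking and filtering policies amount to in practice, here is a small Python sketch. The roles, column names, and the `apply_policies` function are hypothetical and do not reflect how DataOS defines or enforces policies. The point is only that rows and sensitive columns are restricted before any result reaches the model or the user.

```python
import hashlib

# Hypothetical policies: which columns to mask and which rows each role may see.
MASKED_COLUMNS = {"email", "ssn"}
ROW_FILTERS = {
    "analyst_emea": lambda row: row["region"] == "EMEA",
    "admin": lambda row: True,
}
UNMASKED_ROLES = {"admin"}

def mask(value):
    """Replace a sensitive value with a truncated SHA-256 digest."""
    return hashlib.sha256(str(value).encode("utf-8")).hexdigest()[:12]

def apply_policies(rows, role):
    """Filter rows by role, then mask sensitive columns unless the role is exempt."""
    row_filter = ROW_FILTERS.get(role, lambda row: False)  # unknown roles see nothing
    visible = [row for row in rows if row_filter(row)]
    if role in UNMASKED_ROLES:
        return [dict(row) for row in visible]
    return [
        {key: mask(value) if key in MASKED_COLUMNS else value for key, value in row.items()}
        for row in visible
    ]

ROWS = [
    {"name": "Ana", "email": "ana@example.com", "region": "EMEA"},
    {"name": "Bo", "email": "bo@example.com", "region": "APAC"},
]
```

Calling `apply_policies(ROWS, "analyst_emea")` returns only the EMEA row with the email replaced by a digest, `apply_policies(ROWS, "admin")` returns both rows unchanged, and an unrecognized role gets an empty list.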

Switching to Open, Scalable, and Composable Data Infrastructure

DataOS mitigates technical debt and infrastructure challenges as it seamlessly integrates with your existing infrastructure, including legacy systems, without necessitating a rip-and-replace of your current data stack. DataOS is designed to bridge the gap between traditional databases and modern AI technologies, ensuring compatibility and enhancing computational efficiency.

Leveraging advanced metadata management and optimized data processing capabilities, DataOS enables real-time query generation without overburdening legacy infrastructure. This ensures that organizations can deploy LLM-based solutions smoothly, minimizing operational inefficiencies and unlocking the full potential of AI. Furthermore, every data product comes with storage and compute provisioned during development. Hence, the data consumers can autonomously consume data products without depending on your DevOps or development team.

Additionally, DataOS offers a Data Product Hub, a marketplace for data products that makes it easy for data consumers to autonomously access and consume the data required to power their use case. Each data product available on the Data Product Hub contains schema, semantics, SLAs (response time, freshness, accuracy, and completeness), quality checks, and access control information that helps you power any AI model with accurate, reliable, and trustworthy data.
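The information bundled with a data product can be pictured as a small descriptor plus a check of observed metrics against its targets. The sketch below is an assumption-laden illustration: the `DataProduct` fields, the default thresholds, and `slo_violations` are hypothetical and are not the Data Product Hub's actual schema. Accuracy is omitted because measuring it requires a reference dataset.

```python
from dataclasses import dataclass

@dataclass
class DataProduct:
    """Hypothetical descriptor; field names are illustrative, not a DataOS schema."""
    name: str
    schema: dict
    semantics: dict
    allowed_roles: set
    # Targets: upper bounds for latency and staleness, lower bound for completeness.
    max_response_ms: int = 500
    max_staleness_minutes: int = 60
    min_completeness: float = 0.99

def slo_violations(product, observed):
    """Compare observed metrics against the product's targets and list any breaches."""
    violations = []
    if observed["response_ms"] > product.max_response_ms:
        violations.append("response_time")
    if observed["staleness_minutes"] > product.max_staleness_minutes:
        violations.append("freshness")
    if observed["completeness"] < product.min_completeness:
        violations.append("completeness")
    return violations

product = DataProduct(
    name="customer_360",
    schema={"customer_id": "int", "customer_name": "string"},
    semantics={"customer_name": "Full legal name of the customer."},
    allowed_roles={"analyst"},
)
```

A consumer, or an LLM pipeline, could call `slo_violations` before using the product. For example, observed metrics of 320 ms response time, 90 minutes of staleness, and 0.995 completeness would yield `["freshness"]`.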

Optimizing LLM Performance: The Benefits of Using a Data Product Layer

Using a data product layer on top of your existing data infrastructure significantly enhances the performance and accuracy of large language models (LLMs) in several ways:

- Enhanced Context and Understanding: The data product layer provides LLMs with a comprehensive framework of data relationships and hierarchies, enabling more accurate interpretations and reducing errors.

- Efficient Query Generation: By organizing data with enriched metadata, the data product layer allows LLMs to generate highly accurate and effective queries, mitigating the risks of poorly structured inputs.

- Improved Data Quality and Governance: Data products ensure high data quality and robust governance, providing LLMs with accurate, consistent, and well-governed data, thus enhancing model performance and reliability.

- Scalability and Flexibility: Creating reusable and comprehensive data products with modular architecture provides complete scalability and adaptability to evolving business needs.

- Cost Efficiency: The comprehensive data products and semantic data modeling reduce computational overhead, lowering costs for data processing and query execution.

- Enhanced User Experience: Accurate, contextually relevant, and easily interpretable data leads to more meaningful and actionable insights from LLMs, improving overall user experience.

In the era of AI-driven decision-making, the ability to deliver high-quality, context-rich data to LLMs is not just an advantage—it's a necessity. As we look to the future, thriving companies will effectively harness these advanced data management capabilities, turning their vast data resources into actionable insights and competitive advantages.

In conclusion, integrating a Data Product Layer with your existing data infrastructure represents a significant advancement in leveraging Large Language Models (LLMs) for enterprise data management. This powerful combination enhances LLMs' contextual understanding and query precision and ensures robust data governance, scalability, and operational efficiency. In navigating the complexities of big data and AI, organizations benefit from solutions like DataOS, which help in building and managing the data products in a decentralized way.

Curious to learn how DataOS can elevate your data strategy and drive better business outcomes? Contact us today!
