The past few decades have seen an explosion in the number of applications designed to help organizations manage data. It has become common knowledge that data is valuable—each shiny new data application promises to solve a problem, and if organizations are lucky, multiple problems.

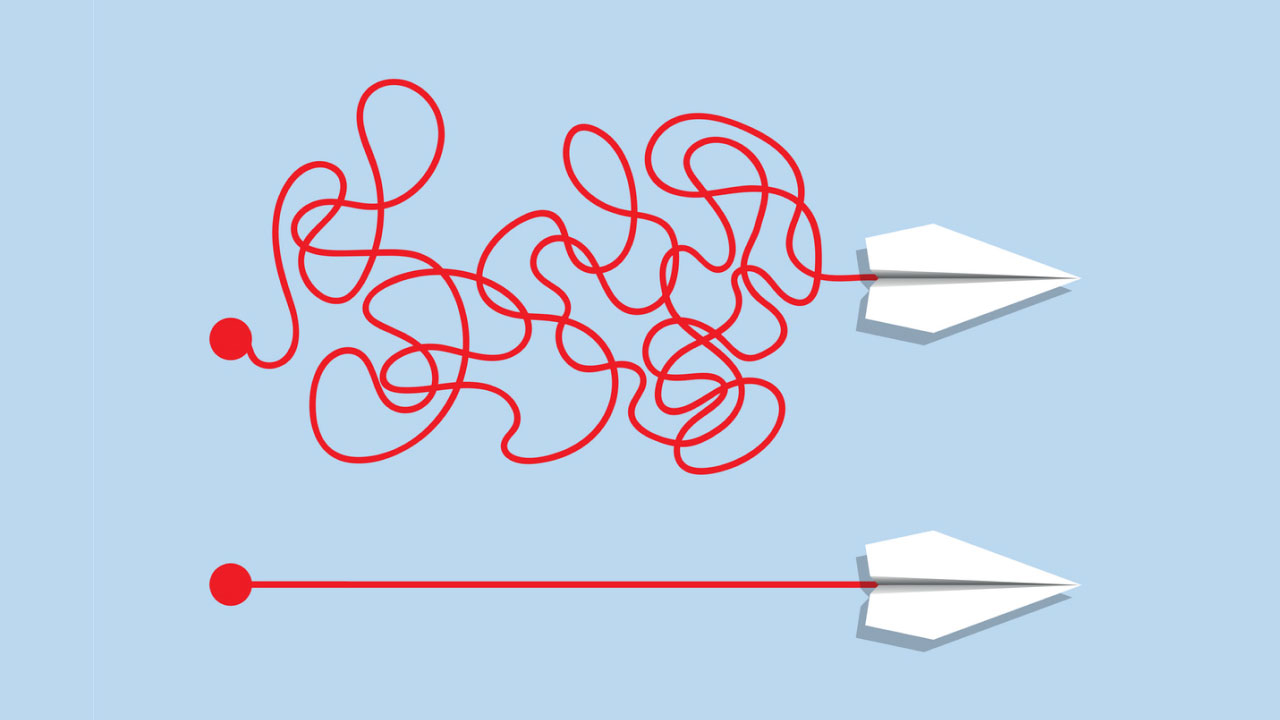

Unfortunately, there’s a catch. Applications create their own ecosystems that must be integrated and maintained, and are ultimately siloed for security and governance. They become little islands, solving problems in the present time but creating problems down the road. Integration is now a nightmare.

There’s now a better way to manage data and eliminate the frustration of integration.

The Challenge of Current Data Solutions

Imagine this is health care. Someone goes to their doctor for two or three annoying symptoms. Instead of finding the underlying cause of those symptoms, the doctor prescribes a medicine that addresses each symptom individually. After a few months, the patient has developed other symptoms as side effects of the medication, but this patient can’t stop taking the pills because the original symptoms return. The doctor continues to treat each symptom individually until the patient is consuming numerous daily medications and still has one underlying condition unchecked, untreated, and getting worse.

This is the state of data for many organizations. Each app is a medication that must be continued to maintain the status quo, while the underlying data management issues rage on. Remove one component, and the entire system collapses.

That’s (hopefully) not how most health care goes, and it certainly shouldn’t be a good analogy for data. Instead of treating the symptoms of data challenges one by one, it’s time to treat the whole condition: fractured, obsolete, siloed, risky data integrations.

A New Framework for Utilizing Data

Data should be focused on operationalization rather than solely on analytics. Data solutions must have an operational layer attached to the analytical layer so that they aren’t simply endpoints. The components of that layer should be composable, allowing businesses to add or remove them freely as their needs change and providing the intuitive scale businesses desperately need.

The solution is a data fabric. It becomes the operational layer, transcending the problem of integration by providing an entirely new way to think about data infrastructure. This happens in three different ways:

A model-map-load approach

Scale is a challenge because businesses are still reinventing the wheel for every request. ELT and ETL models repeatedly rebuild pipelines, slowing down the process and creating backlogs for enterprises with large-scale data.

Instead of forcing IT to micro-manage data, rebuild pipelines for every request or “own” data, automation allows data to scale. Without true automation, the system is too unwieldy, too disparate to manage safely.

A new approach to governance

Attribute-based access controls allow DataOps to expand without risking loopholes. This new era of data needs a governance engine worthy of it. With granular governance, security is just as customizable as the framework itself.

Organizations should be able to decide what governance looks like at the atomic level without worrying that shifting in one area collapses another. Current governance tools are too rigid; this is a revolutionary approach.
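To make the idea of attribute-based access control concrete, here is a minimal sketch in Python. It is an illustration only, not the DataOS governance engine or its API: the `Policy` class, the `visible_columns` and `filter_row` helpers, the column names, and the user attributes are all hypothetical. Each column carries its own policy, and a column is shown only when the user holds every attribute that policy requires. Columns with no policy are denied by default.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """Hypothetical per-column policy: the (key, value) attributes a user must hold."""
    column: str
    required: frozenset = frozenset()


# Hypothetical policies, one per governed column.
POLICIES = [
    Policy("patient_id"),  # no attributes required
    Policy("diagnosis", frozenset({("role", "clinician")})),
    Policy("billing_code", frozenset({("role", "billing"), ("region", "us")})),
]


def visible_columns(user_attrs, policies=POLICIES):
    """Return the columns whose required attributes are all present on the user."""
    held = set(user_attrs.items())
    return {p.column for p in policies if p.required <= held}


def filter_row(row, user_attrs, policies=POLICIES):
    """Drop every column the user may not see; columns without a policy are denied."""
    allowed = visible_columns(user_attrs, policies)
    return {col: value for col, value in row.items() if col in allowed}


# Hypothetical record; "ssn" has no policy, so nobody sees it.
ROW = {"patient_id": 17, "diagnosis": "asthma", "billing_code": "J45", "ssn": "000-00-0000"}

clinician_view = filter_row(ROW, {"role": "clinician"})
billing_view = filter_row(ROW, {"role": "billing", "region": "us"})
```

Because each policy is attached to a single column, changing the rule for `billing_code` cannot affect who sees `diagnosis`, which is the kind of isolation granular governance is meant to provide.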

Bring back observability

As data tools progressed, observability decreased. Organizations feed data into a void, and the void spits out insights. For data to scale, there must be radical observability, which allows teams to automate pipelines, ensure governance, and remove rigidity.

Data fabric transcends integration

With a scalable data fabric, businesses don’t have to cause an uproar by purchasing yet another solution. A data fabric is not another application. It’s the operational layer that finally unlocks the full potential of every application. It’s an overlay that connects data points no matter the source, with no need to worry about integration issues.

A data fabric aims to treat the underlying condition. It productizes data and allows it to move freely. With the DataOS fabric, organizations receive a revolutionary governance strategy. Teams gain ownership of data and push only what they need. There’s no need to store or move all the data available, so organizations reduce computing and warehousing costs.

It solves three major integration issues:

- No more silos – The nightmare of integration is managing applications that don’t work together or that offer only feature-limited integration. A data fabric brings applications together, pulls data from a single source of truth, and ensures data quality no matter where the data resides.

- For the first time: flexibility – A data fabric creates a composable system through the operational layer. As organizations add or remove pieces, the structure remains intact. It scales, and it creates true real-time insight.

- No more data copying – Instead of endlessly copying data and losing track of iterations, a data fabric allows users to access a single source of truth. That access gives users exactly the data they need and no more while ensuring security and governance remain intact down to a granular level.

Deploying a data fabric is easier than you think

A data fabric transforms data from a business expense to a business asset. With a data fabric like DataOS, companies can tap into the insights data offers in less time and with less cost.

A data fabric isn’t just another application. It won’t throw the organization into an upheaval that takes a year or more to smooth out. Instead, businesses could transform their data in just six weeks. Plus, it can multiply ROI by as much as ten times at just one-tenth of the cost.

If it sounds too good to be true, take a look at a real company finding real results by rethinking data beyond the ETL. Contact The Modern Data Company to find out how.
